Over the last year I’ve been thinking of creating an app that uses people’s smartphone geolocation to tag every plant (and eventually every living being) on the planet, but it looks like Apple has beaten everyone to the punch in a really amazing and exciting way.

You may have noticed that in the latest iPhone operating system (iOS) update, you can now search your photos by ‘things’. I put this to the test and, considering it’s early days, it’s already doing a really good job of identifying some of the more popular flowers. It does this automatically, by shape.

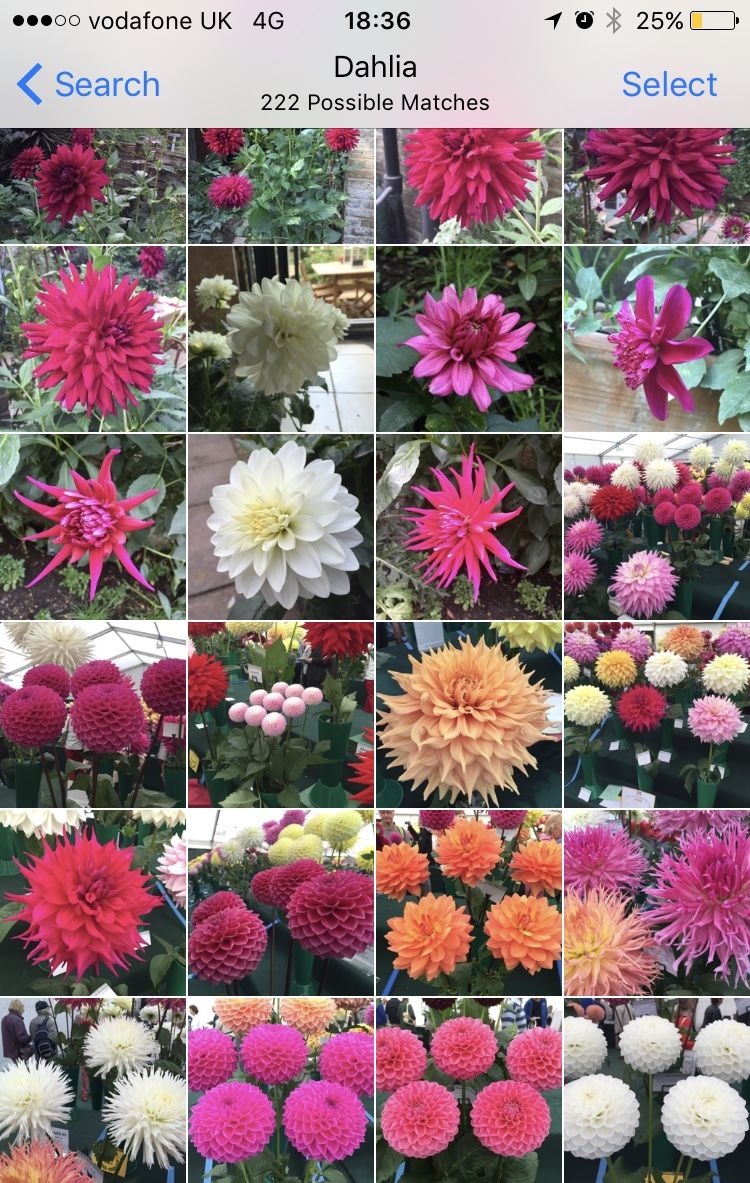

I tried searching for Dahlias (of course) and it showed me all of my Dahlia photos from across the last three years…

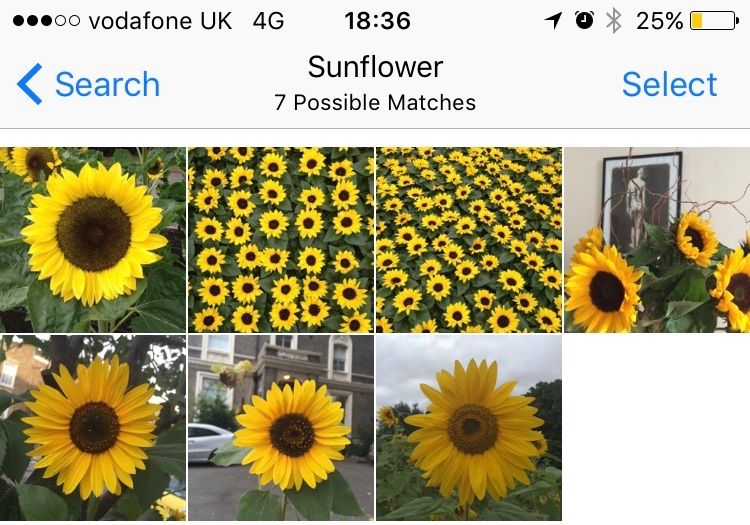

… then Sunflowers …

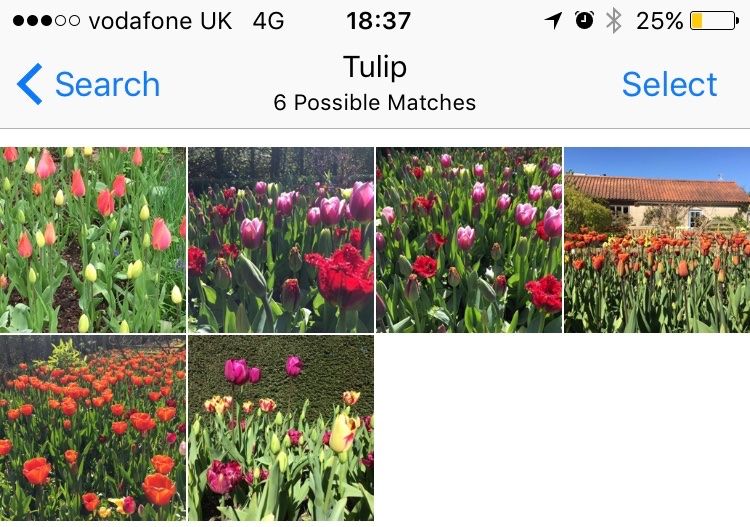

… Tulips …

… Daffodils…

… Maple trees …

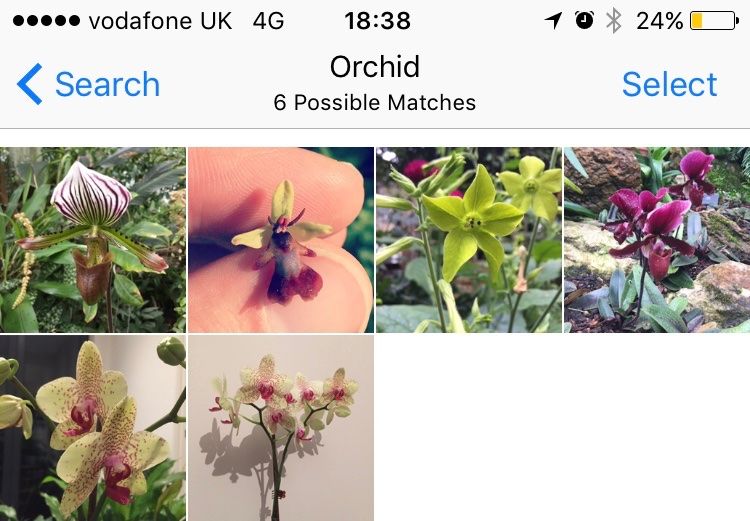

… and most impressively (if you ignore the Nicotiana) it even identified both British wild Orchids and our house moth orchid…

It’s not perfect by any means and you can’t search for many plant types yet. Even so, what it is already doing is exciting, and so is what that means for the future. I then decided to see if it could do animals and insects too, and it can.

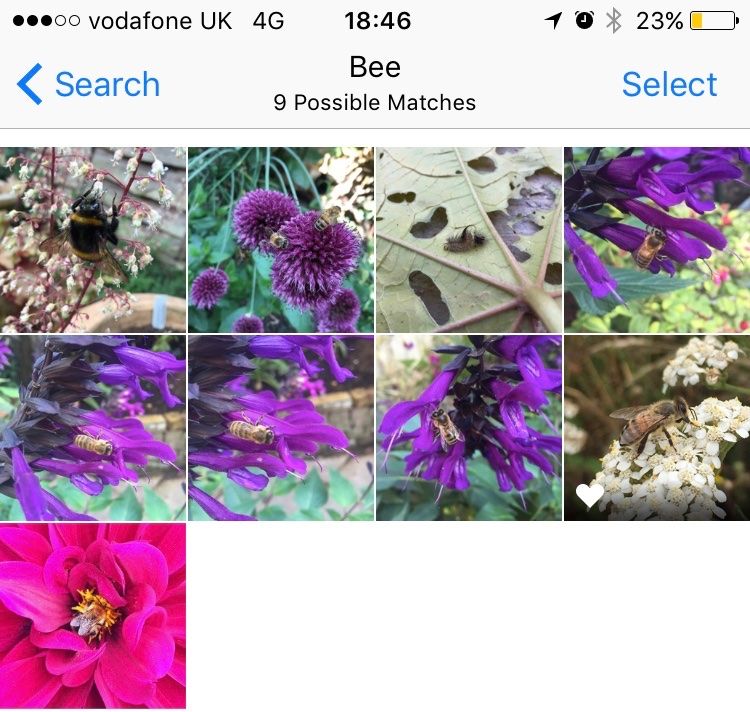

Bees…

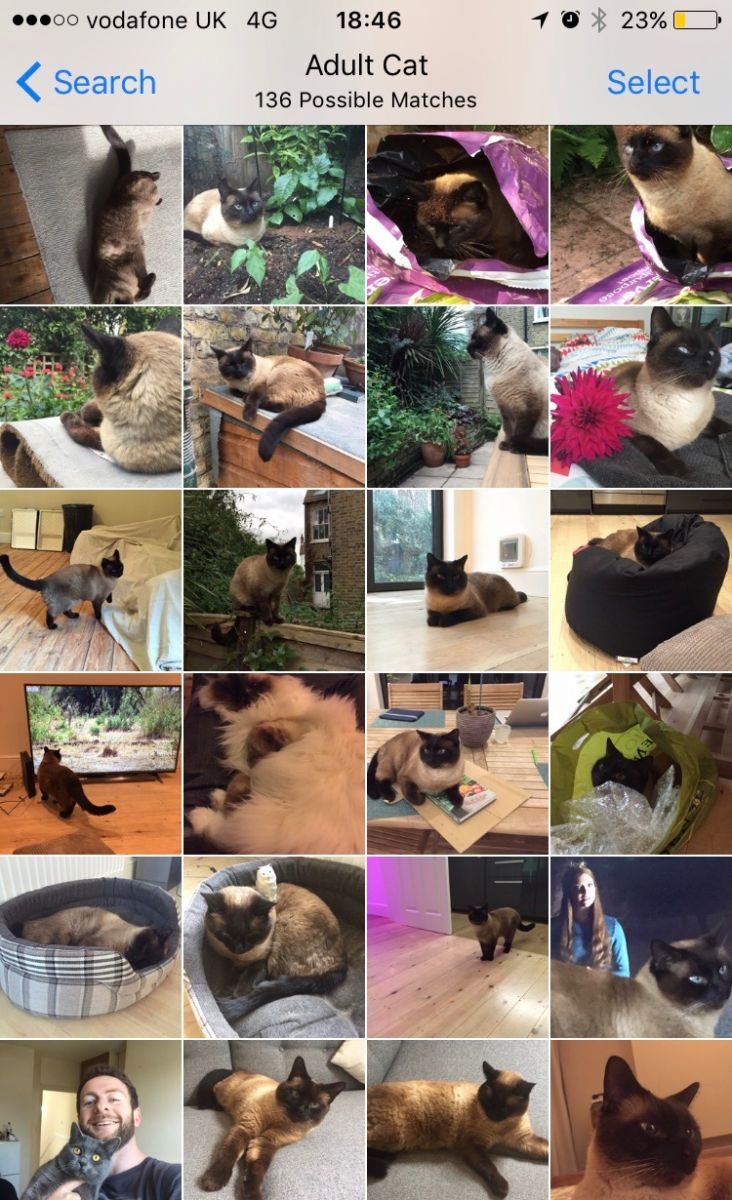

… and differentiating between kittens and adult cats …

Considering this has only recently launched, the possibilities for how it will work and be used in a year or two are mind-boggling, especially as these photos were taken over several years and are geotagged as standard.

It gets better with machine learning

The way it works is that Apple employs ‘machine learning’, where computers analyse the patterns, colours and shapes in images and record them. Once a pattern has been matched and is working, e.g. a pattern for the shape of a Dahlia or Orchid, people at Apple categorise it.
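For the curious, here is a very rough sketch in Python of the general idea: a person labels a few example patterns, and the computer matches a new photo to the closest one. To be clear, this is not Apple’s actual method, which I don’t know the details of. The feature numbers (a made-up "roundness" and "hue" score per photo) and the function names are all my own assumptions for illustration, and it uses a simple technique called nearest-centroid classification.

```python
import math


def centroid(vectors):
    """Average a list of equal-length feature vectors, column by column."""
    n = len(vectors)
    return tuple(sum(column) / n for column in zip(*vectors))


def train(labelled_examples):
    """Build one 'typical pattern' (centroid) per human-supplied label."""
    return {label: centroid(vectors) for label, vectors in labelled_examples.items()}


def classify(model, features):
    """Return the label whose typical pattern is closest to the new photo."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Made-up (roundness, hue) scores for photos a person has already labelled.
labelled_examples = {
    "dahlia": [(0.9, 0.95), (0.8, 0.85)],
    "sunflower": [(0.7, 0.15), (0.6, 0.1)],
}

model = train(labelled_examples)
print(classify(model, (0.75, 0.8)))   # closest to the dahlia examples
print(classify(model, (0.65, 0.2)))   # closest to the sunflower examples
```

Real systems work from far richer patterns than two numbers, but the division of labour is the same: people supply the names, the computer does the matching.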

Before long, Apple should be able to identify species and cultivars by shape and colour. Once you know a flower is a Dahlia, it’s a small step to identify cultivars based on the shades and shapes of the flower type (e.g. cactus vs decorative).

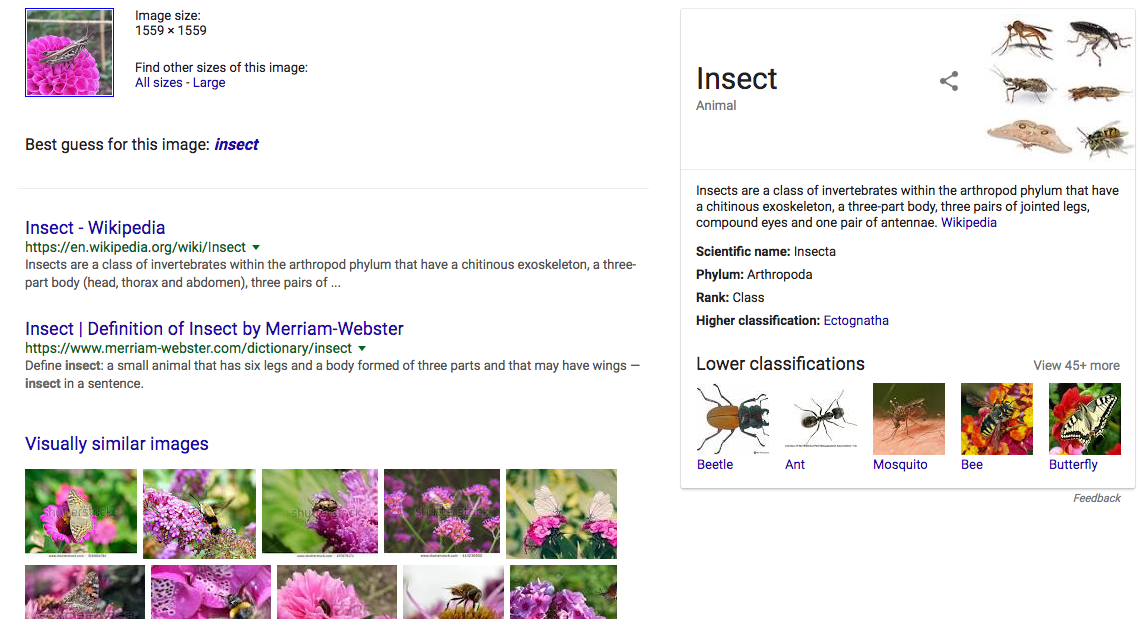

Google is doing something similar too through image matching. If you go to https://images.google.com you can try it out for yourself. You upload an image using the little camera icon and you’ll get back a set of results based on that image, like this:

From my photo it recognised that there was an insect on the flower and that the flower was pink.

So, how might this technology save the world?

When you think about all the things technology can do these days, and specifically your phone and photos, it’s easy to jump ahead a few years to see where this is all going.

I’ll try to tie it all together in five steps:

- Billions of people around the world take photos of plants, fungi, insects, animals.

- Photos are all tagged by date, location and type of life (with the range of recognised species expanding in future). All the photos you’ve taken in the past will work too.

- This database of photos can be translated into a massive set of data about the location and scale of life across the planet.

- Over time this database can be monitored to see how life is growing or shrinking around the world, and where certain species are found or no longer exist.

- This data can be used to educate and direct the human race to act and save various species.
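To make the steps above a little more concrete, here is a minimal sketch in Python of what monitoring such a database might look like. Everything in it is an assumption on my part: the `Sighting` record, the function names and the sample data are made up for illustration, and no real photo service exposes its data this way as far as I know.

```python
import math
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Sighting:
    """One tagged photo: what was seen, when and where (hypothetical record)."""
    species: str
    taken_on: date
    latitude: float
    longitude: float


def yearly_counts(sightings, species):
    """Count photos of one species per year."""
    return Counter(s.taken_on.year for s in sightings if s.species == species)


def occupied_cells(sightings, species, year, cell_degrees=1.0):
    """Return the coarse map grid cells where a species was photographed that year."""
    return {
        (math.floor(s.latitude / cell_degrees), math.floor(s.longitude / cell_degrees))
        for s in sightings
        if s.species == species and s.taken_on.year == year
    }


# Made-up sample data, not real observations.
sightings = [
    Sighting("dahlia", date(2014, 8, 1), 51.5, -0.1),
    Sighting("dahlia", date(2015, 8, 3), 51.5, -0.1),
    Sighting("dahlia", date(2015, 9, 10), 51.6, -0.2),
    Sighting("bee", date(2014, 6, 1), 51.5, -0.1),
    Sighting("bee", date(2014, 7, 1), 52.2, 0.1),
    Sighting("bee", date(2015, 6, 5), 51.5, -0.1),
]

bee_counts = yearly_counts(sightings, "bee")
# Grid cells where bees were photographed in 2014 but not in 2015.
cells_lost = occupied_cells(sightings, "bee", 2014) - occupied_cells(sightings, "bee", 2015)
print(dict(bee_counts), cells_lost)
```

One caveat with a sketch like this: photo counts reflect where and when people take pictures as much as where life actually is, so any real version would need to correct for that before drawing conclusions.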

Of course, this technology has to fall into the right hands rather than those of people who unlock their laptops with their noses…